Spark Reading From a Directory Reads the Files Multiple Times

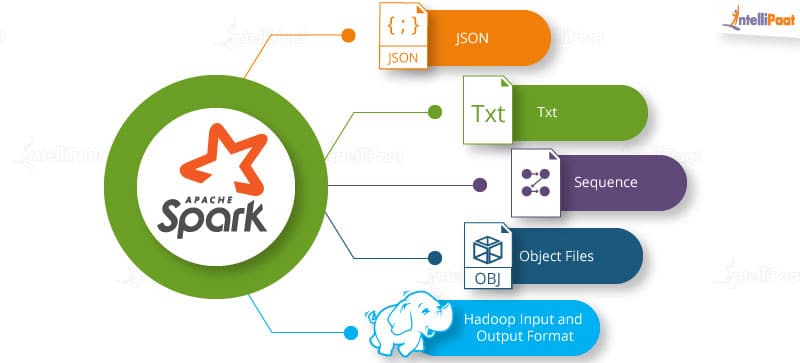

Spark supports multiple input and output sources for loading and saving data. It can access data through the InputFormat and OutputFormat interfaces used by Hadoop MapReduce, which are available for most file formats and file systems, such as Text Files, JSON Files, CSV and TSV Files, Sequence Files, Object Files, and Hadoop Input and Output Formats. Let us discuss them.

File Formats

- Text Files

- JSON Files

- CSV and TSV Files

- Sequence Files

- Object Files

- Hadoop Input and Output Formats

File Systems

- Structured Data with Spark SQL

- Apache Hive

- Databases

File Formats

Spark provides a simple way to load and save data files in a large number of file formats. These formats range from unstructured, like text, to semi-structured, like JSON, to structured, like sequence files. Spark transparently handles compressed input files based on the file extension.


Text Files

Text files are very simple and convenient to load from and save to in Spark applications. When we load a single text file as an RDD, each input line becomes an element in the RDD. Spark can also load multiple whole text files at the same time into a pair RDD, with the key being the file name and the value being the contents of each file.

- Loading the text files: Loading a single text file is as simple as calling the textFile() function on our SparkContext with the path to the file, as shown below:

input = sc.textFile("file:///home/holden/repos/spark/README.md")

- Saving the text files: Spark provides a function called saveAsTextFile(), which takes a path and writes the contents of the RDD to it. The path is treated as a directory, and multiple output files will be produced in that directory. This is how Spark is able to write output from multiple nodes.

- Example:

result.saveAsTextFile(outputFile)
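The load and save calls for text files can be combined into a short pipeline. The following is a minimal PySpark-style sketch, assuming `sc` is an already created SparkContext; the function name `upper_case_copy` and its parameters are hypothetical and introduced only for illustration.

```python
def upper_case_copy(sc, input_path, output_dir):
    """Read a text file, upper-case every line, and write the result.

    `sc` is assumed to be an existing SparkContext; `input_path` and
    `output_dir` are hypothetical paths supplied by the caller.
    """
    # textFile() yields one RDD element per input line.
    lines = sc.textFile(input_path)

    # Transform each line independently.
    shouted = lines.map(lambda line: line.upper())

    # output_dir is treated as a directory; Spark writes one part file
    # per partition inside it.
    shouted.saveAsTextFile(output_dir)
    return shouted

# Example call (paths are placeholders):
#   upper_case_copy(sc, "file:///path/to/input.txt", "file:///path/to/output")
```

By default, saveAsTextFile() fails if the output directory already exists, so a fresh path is needed for each run.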

JSON Files

JSON stands for JavaScript Object Notation, which is a lightweight data interchange format. It is text-based, so it can be easily sent to and received from a server. Python has a built-in package named 'json' to support JSON.

- Loading the JSON Files: For all supported languages, the approach of loading the data as text and then parsing the JSON can be adopted. This works directly when each line holds one JSON record. If records span multiple lines, the developer will have to load the entire file and parse the records one by one.

- Saving the JSON Files: In comparison to loading JSON files, writing to them is much easier because the developer does not have to worry about incorrectly formatted data values. The same libraries that were used to convert the RDDs of strings into parsed JSON data can be used; this time, however, RDDs of structured data are taken and converted into RDDs of strings.
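The parse-then-serialize approach for JSON can be sketched with Python's built-in json package. This is a minimal illustration, assuming one JSON record per line; the helper names `parse_json_lines` and `to_json_lines` and the sample records are hypothetical.

```python
import json

def parse_json_lines(lines):
    """Parse one JSON record per line, skipping lines that fail to parse."""
    records = []
    for line in lines:
        try:
            records.append(json.loads(line))
        except json.JSONDecodeError:
            continue  # malformed record: skip it instead of failing the job
    return records

def to_json_lines(records):
    """Convert structured records back into JSON strings, one per record."""
    return [json.dumps(record) for record in records]

# Hypothetical sample input: two valid records and one malformed line.
sample = [
    '{"name": "Holden", "lovesPandas": true}',
    'this is not json',
    '{"name": "Sparky", "lovesPandas": false}',
]
parsed = parse_json_lines(sample)
serialized = to_json_lines(parsed)

# With an existing SparkContext `sc`, the same helpers could be applied as:
#   records = sc.textFile(input_path).mapPartitions(parse_json_lines)
#   records.map(json.dumps).saveAsTextFile(output_dir)
```

Skipping malformed lines is one possible policy; a job that must not lose data could instead collect the bad lines for later inspection.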


CSV and TSV Files

Comma-separated values (CSV) files are a very common format used to store tables. These files have a fixed number of fields in each line, the values of which are separated by commas. Similarly, in tab-separated values (TSV) files, the field values are separated by tabs.

- Loading the CSV Files: The loading procedure for CSV and TSV files is quite similar to that for JSON files. To load a CSV/TSV file, its content is first loaded as text and then processed. As with JSON, there are many different CSV libraries, but it is suggested to use only the one corresponding to each language.

- Saving the CSV Files: Writing to CSV/TSV files is also quite easy. However, as the output does not carry the field names with each record, a mapping is required for consistent results. An easy way to perform this is to write a function that converts the fields into positions in an array.
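The load-as-text-then-parse step and the field-to-position mapping for CSV and TSV can be sketched with Python's built-in csv module. This is a minimal illustration, assuming each record fits on one line; the field list `FIELDS`, the helper names, and the sample values are hypothetical.

```python
import csv
import io

# Hypothetical, fixed field order: it maps each field name to a column position.
FIELDS = ["name", "favourite_animal"]

def parse_csv_line(line, delimiter=","):
    """Parse one CSV/TSV line into a dict keyed by FIELDS."""
    reader = csv.DictReader(io.StringIO(line), fieldnames=FIELDS,
                            delimiter=delimiter)
    return next(reader)

def to_csv_line(record, delimiter=","):
    """Write one record as a CSV/TSV line, with fields in FIELDS order."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, delimiter=delimiter)
    writer.writerow([record[field] for field in FIELDS])
    # csv.writer appends a line terminator; strip it so the caller
    # controls how lines are joined or saved.
    return buffer.getvalue().rstrip("\r\n")

row = parse_csv_line('Holden,"panda, red"')
line = to_csv_line(row)
tsv_row = parse_csv_line("Sparky\tbear", delimiter="\t")

# With an existing SparkContext `sc`, the same helpers could be applied as:
#   rows = sc.textFile(input_path).map(parse_csv_line)
#   rows.map(to_csv_line).saveAsTextFile(output_dir)
```

Using a CSV library rather than splitting on commas keeps quoted fields that contain the delimiter intact.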

Sequence Files

A sequence file is a flat file that consists of binary key/value pairs and is widely used in Hadoop. The sync markers in these files allow Spark to seek to a particular point in a file and re-synchronize with the record boundaries.

- Loading the Sequence Files: Spark comes with a specialized API that reads sequence files. All we have to do is call sequenceFile(path, keyClass, valueClass, minPartitions) on the SparkContext.

- Saving the Sequence Files: To save sequence files, a pair RDD, along with the types to write, is required. For several native types, implicit conversions between Scala and Hadoop Writables are possible. Hence, to write a native type, we just save the pair RDD by calling the saveAsSequenceFile(path) function. If the conversion is not automatic, we have to map over the data and convert it before saving.

Object Files

Object files are a simple wrapper around sequence files that enables saving RDDs containing value records only. Saving an object file is quite simple, as it only requires calling saveAsObjectFile() on an RDD.


Hadoop Input and Output Formats

An input split refers to the data present in HDFS. Spark provides APIs to use the InputFormat of Hadoop in Scala, Python, and Java. The old APIs were hadoopRDD and hadoopFile, but the APIs have since been improved, and the new APIs are known as newAPIHadoopRDD and newAPIHadoopFile.

For the Hadoop OutputFormat, Hadoop uses TextOutputFormat, in which each key and value pair is separated by a delimiter (a tab by default) and saved in a part file. Spark supports the Hadoop APIs for both mapred and mapreduce.

- File Compression: For most of the Hadoop outputs, a compression codec can be specified, which is then used to compress the data.

File Systems

A wide array of file systems is supported by Apache Spark. Some of them are discussed below:

- Local/Regular FS: Spark can load files from the local file system, which requires the files to be available at the same path on all nodes.

- Amazon S3: This file system is suitable for storing large amounts of data. It works fastest when the compute nodes are inside Amazon EC2. However, its performance can degrade if we go over the public network.

- HDFS: It is a distributed file system that works well on commodity hardware. It provides high throughput.


Structured Data with Spark SQL

Spark SQL works effectively on semi-structured and structured data. Structured data is data that has a schema, that is, a consistent set of fields.

Apache Hive

One of the common structured data sources on Hadoop is Apache Hive. Hive can store tables in a variety of formats, from plain text to column-oriented formats, inside HDFS, and it also supports other storage systems. Spark SQL can load any table supported by Hive.

Databases

Spark supports a wide range of databases with the help of Hadoop Connectors or Custom Spark Connectors. Some of them are JDBC, Cassandra, HBase, and Elasticsearch.


Source: https://intellipaat.com/blog/tutorial/spark-tutorial/loading-and-saving-your-data/
